Interconnect in the Age of AI: Investing in Upscale AI

Three infrastructure booms have defined the new millennium: Telecom in the early 2000s, Cloud in the 2010s, and AI today.

Each of these eras has created outsized opportunities for new companies to break through as buyer needs shift to serve emerging workloads. As we enter year three of the post-ChatGPT world, Celesta is excited to back Upscale AI in their $100M seed round as they work to deliver the most advanced open networking solutions for AI infrastructure.

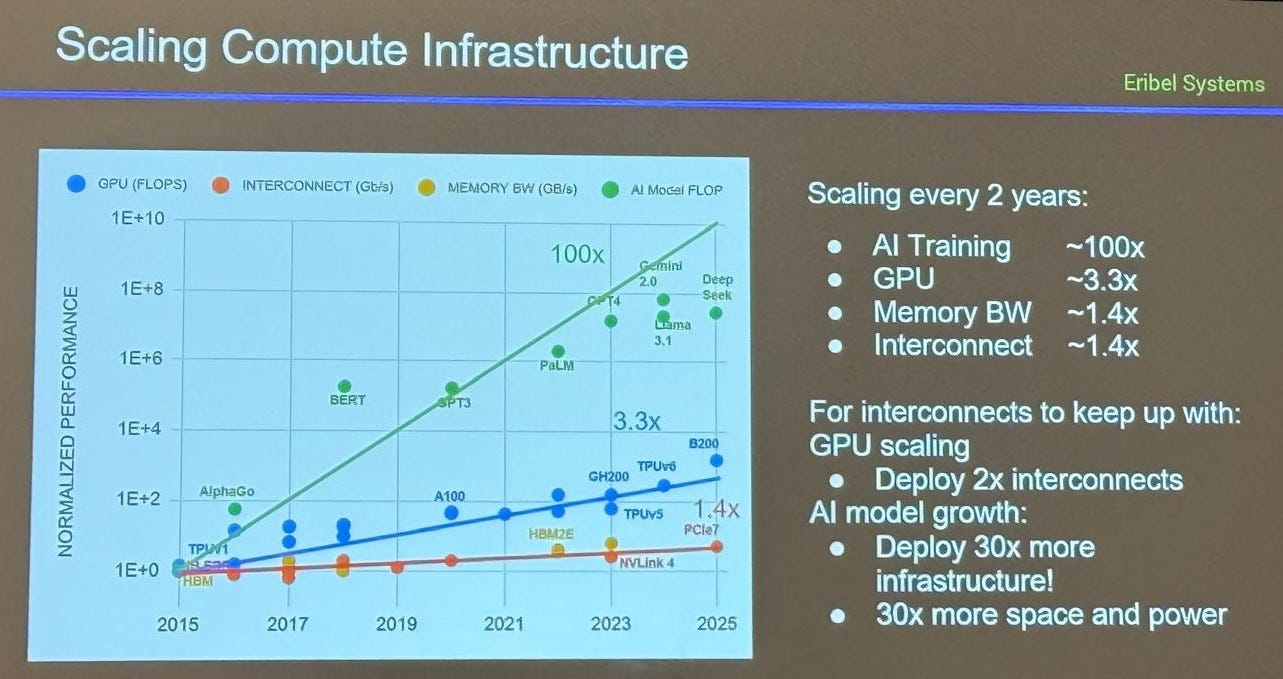

LLMs have quickly become a ubiquitous form of compute workload, creating unique challenges in networking. First, AI model training involves pre-training runs on massive data sets (essentially the entire internet) and increasingly large model sizes (e.g. 1T+ parameters). Training runs have scaled at breakneck speed, with infrastructure scrambling to keep pace.

Frontier AI labs race to build ever-larger accelerator clusters to support these training runs. For example, xAI boasts of its 200k-GPU “Colossus” AI training cluster. Coordinating and exchanging data across that many GPUs over a multi-week training run makes networking bandwidth and latency make-or-break. Without adequate networking, multi-billion-dollar GPU investments deliver suboptimal returns.
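As a rough illustration of why both bandwidth and latency matter at this scale, the sketch below uses the textbook ring all-reduce cost model for synchronizing gradients across GPUs. It is a back-of-the-envelope estimate, not a description of how any specific cluster is built: the function name and every number in the usage example are hypothetical, and production systems typically use hierarchical collectives rather than one flat ring.

```python
def ring_allreduce_seconds(payload_bytes, num_gpus, link_bytes_per_s, step_latency_s=0.0):
    """Estimate the time for one ring all-reduce (simple alpha-beta model).

    A ring all-reduce runs 2 * (N - 1) steps; in each step every GPU sends
    one chunk of size payload / N to its neighbor.
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    steps = 2 * (num_gpus - 1)
    chunk_bytes = payload_bytes / num_gpus
    # Each step pays a bandwidth cost for the chunk plus a fixed per-hop latency.
    return steps * (chunk_bytes / link_bytes_per_s + step_latency_s)


if __name__ == "__main__":
    # Hypothetical inputs: 8 GB of gradients, 50 GB/s links, 5 microseconds per hop.
    for n in (8, 1024):
        t = ring_allreduce_seconds(8e9, n, 50e9, step_latency_s=5e-6)
        print(f"{n} GPUs: {t:.4f} s per all-reduce")
```

In this model the bandwidth term approaches 2 × payload / link bandwidth as the GPU count grows, while the latency term grows linearly with the number of GPUs, so both have to improve for large clusters to stay efficient.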

The story is similar on the inference side. AI inference requires holding the model in memory. Because these models are so large, inference providers must connect multiple accelerators. To deliver snappy UX and high throughput, providers need low-latency, high-bandwidth communication between GPUs. Again, the network is the performance ceiling.
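The arithmetic behind "must connect multiple accelerators" can be sketched as follows. This is a minimal estimate that counts only model weights; the function name, the 2 bytes per parameter, the 80 GB of accelerator memory, and the usable fraction are all illustrative assumptions, and real deployments also need memory for the KV cache and activations.

```python
import math


def min_accelerators(num_params, bytes_per_param, memory_bytes_per_accelerator,
                     usable_fraction=0.8):
    """Lower bound on accelerators needed just to hold the model weights."""
    weight_bytes = num_params * bytes_per_param
    # Assume only part of each accelerator's memory is available for weights.
    usable_bytes = memory_bytes_per_accelerator * usable_fraction
    return math.ceil(weight_bytes / usable_bytes)


if __name__ == "__main__":
    # Hypothetical: a 1T-parameter model at 2 bytes per parameter on 80 GB accelerators.
    print(min_accelerators(1e12, 2, 80e9))
```

Every token generated then involves traffic between those accelerators, which is why the interconnect sets the latency floor.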

Upscale is developing solutions for two key areas of AI networking, scale-up and scale-out, along with full system solutions and a software stack built on the open-source SONiC operating system.

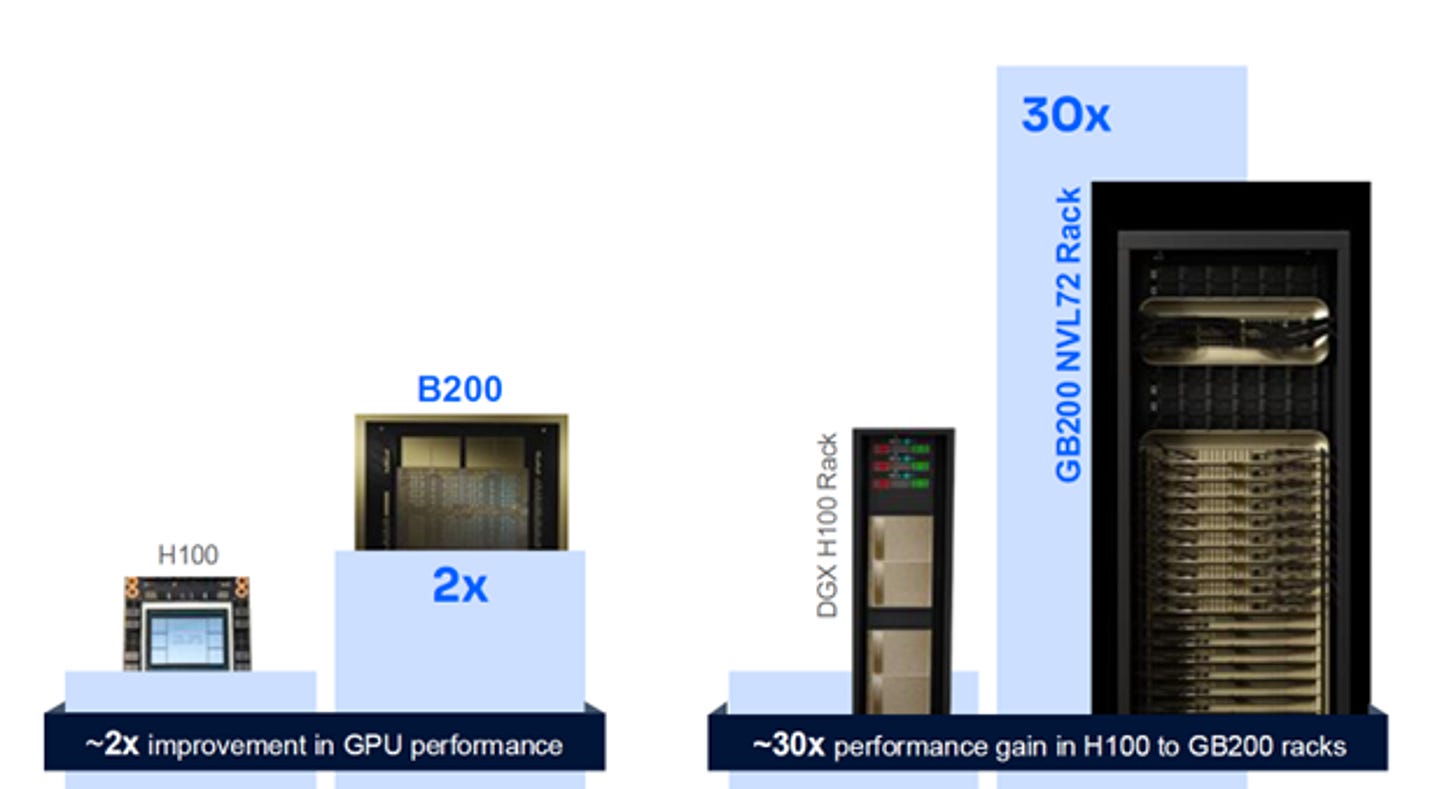

Scale-up links multiple accelerators together in a single “pod”, allowing them to function as a unified system and share memory. Nvidia’s NVLink is the market leader in this space and a major differentiator for Nvidia-based systems like the DGX SuperPOD. In the transition from the Hopper architecture to Blackwell, Nvidia delivered only a 2x improvement in the GPU itself but was able to tout 30x improvements thanks to performance gains from NVLink, its rack-level backplane interconnect.

Scale-out is the process of connecting accelerators across racks to create large-scale systems. This market is primarily served by Ethernet and InfiniBand today. The dominant switch vendors here are Cisco and Arista, with Nvidia emerging. The merchant silicon for Ethernet switching comes almost entirely from Broadcom and Marvell.

Nvidia did $7.3Bn of networking revenue in the last quarter alone. Yet the industry is waking up to the downsides of relying on proprietary standards for scale-up and of having a single vendor dominate both processing and networking. The primary alternative to NVLink is PCIe, which is lightweight and open but doesn’t run as fast as NVLink.

To solve this problem, an industry consortium came together to create UALink. UALink combines PCIe's transport layer with Ethernet's physical layer, delivering both simplicity and speed in an open standard. Open standards are key in scale-out as well, where the Ultra Ethernet Consortium is defining a specification for AI Ethernet.

Even though the problem – and even the technical spec – is known, developing switching silicon and systems is quite hard. The challenge intensifies with the need for unified software across scale-up and scale-out systems.

These converging challenges make Upscale AI particularly compelling. Upscale’s team combines silicon design talent from Innovium (now part of Marvell), where they delivered switches for the cloud era, with leaders from Palo Alto Networks who have shipped systems and software at global scale.

Moreover, AI systems are high energy-density and require advanced cooling. Upscale enjoys a unique advantage through its spinout from Auradine, a leading Bitcoin mining systems company. BTC mining is one of the most energy and heat-dense forms of computing. Mining companies adopted liquid cooling before traditional compute due to extreme ASIC heat generation and clear economic benefits from overclocking. Upscale can take lessons from Auradine’s work to roll out liquid-cooled systems in the BTC market and apply them to systems in AI where liquid cooling is starting to meaningfully penetrate as of the Nvidia Blackwell platform.

The market is ready for solutions that can meet speed and cooling demands that are increasing at an unprecedented pace. Upscale AI will be the foundational company to push open standards-based networking to the forefront on performance and help usher in the era where network fabric scales as fast as the models.

It's a new era in AI infrastructure, one that doesn’t need to trade off between open standards and exceptional performance.